Comparing Popular 5-Gram Carts: Which One Reigns Supreme?

As the cannabis market continues to evolve, 5-gram carts have emerged as a preferred option for heavy users and value-seekers alike. These high-capacity cartridges offer […]

Youth Basketball Excellence: Arming Young Athletes with European Expertise

Our Youth Basketball Program is committed to developing young talent and encouraging personal growth through basketball. Serving young athletes between the ages of […]

Revell Ace Hardware: Top Lawn Care Products for a Pristine Yard

Transform your lawn into a lush paradise with Revell Ace Hardware’s exceptional range of lawn care products. Whether you’re battling weeds, nourishing your plants, or […]

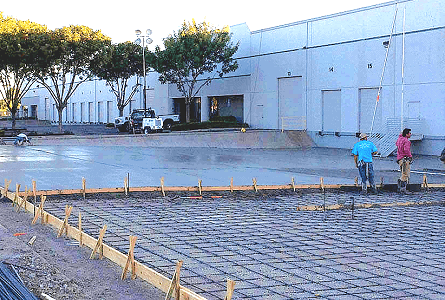

Innovative Concrete Solutions for Sloped Areas with Retaining Wall Installation

Sloped sites can pose serious difficulties for homeowners and builders alike. Safety, stability, and aesthetic appeal all depend on good planning and sensible solutions. Retaining […]

Mold Matters: Controlling the Menace of Mold Spores for Improved Health

Prolonged exposure to mold spores can have major negative effects on both body and mind. Many people are unaware of the harm these microscopic […]

The Benefits of Implementing SEO for Business Success

Search engine optimization (SEO) is vital. To generate traffic, enhance user experience, and boost income, companies in all kinds of fields are increasingly turning […]

Unique Ideas to Spice Up Your Next Scavenger Hunt Adventure

Treasure hunts are a terrific way to explore, compete, and enjoy the great outdoors, or even indoor spaces. They are also a classic […]

Supporting Little Smiles: Children’s Dental Mouthguards

A child's safety is every parent's primary concern, and in sports and play, injury is always a possibility. Dental injury is one […]

Locked Out? Vancouver Residential Locksmiths Awavers are ready to assist!

Getting locked out of your house can be a frustrating and embarrassing experience. Whether you broke a lock, lost your keys, or unintentionally locked yourself […]

Concrete Contractors in Venice: Trusted Experts for Your Projects

When it comes to major construction or renovation projects, hiring a reliable and skilled concrete contractor is crucial for ensuring quality results. Whether your intended […]